I recently wrote about the Cos method. While rereading the various papers on Heston semi-analytical pricing, especially the nice summary by Schmelzle, it struck me how close the derivations of the Attari/Bates methods and the Cos method are. I then started wondering whether Attari was really much worse than the Cos method.

I noticed that the accuracy of the Attari method is directly linked to the accuracy of the underlying numerical quadrature. I found that the doubly adaptive Newton-Cotes quadrature by Espelid (coteda) was the most accurate and the fastest on this problem (compared to Gauss-Laguerre, Gauss-Legendre, extrapolated Simpson and Lobatto). If the accuracy of the integration is 1E-6, the maximum accuracy of Attari will also be 1E-6. This means that very out-of-the-money options will be completely mispriced (the price might even be negative). In a sense, this is similar to what I observed with the Cos method.

"Lord-Kahl" uses 1E-4 integration accuracy, "Attari" uses 1E-6, and "Cos" uses 128 points. The reference is computed using Lord-Kahl with Newton-Cotes and 1E-10 integration accuracy.

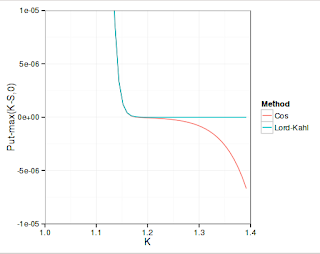

Well here are the results in terms of accuracy:

As expected, Lord-Kahl absolute accuracy is only 1E-5 (a bit better than 1E-4 integration accuracy), while Attari is a bit better than 1E-6, and Cos is nearly 1E-7 (higher inaccuracy in the high strikes, probably because of the truncation inherent in the Cos method).

The relative error tells a different story: Lord-Kahl is accurate to 1E-4 here, over the full range of strikes. It is the only method that stays accurate for very out-of-the-money options: the optimal alpha makes it possible to go below machine epsilon without problems. The Cos method can only reach an absolute accuracy of around 5E-10 and oscillates around that level, while the reference prices can be as low as 1E-25. Similarly, the Attari method oscillates around 5E-8.
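To make the absolute-accuracy floor concrete, here is a minimal sketch of a cosine-series put pricer. It is not the Heston code behind the results above: I swap in Black-Scholes because its characteristic function fits on one line and the exact price is known. The function names, the truncation width of 12 standard deviations, and the parameters are all assumptions chosen for illustration.

```python
import cmath
import math


def bs_put(s0, k, r, sigma, t):
    """Exact Black-Scholes put, written with erfc so tiny tail values survive."""
    sq = sigma * math.sqrt(t)
    d1 = (math.log(s0 / k) + (r + 0.5 * sigma * sigma) * t) / sq
    d2 = d1 - sq
    cdf_neg = lambda d: 0.5 * math.erfc(d / math.sqrt(2.0))  # N(-d)
    return k * math.exp(-r * t) * cdf_neg(d2) - s0 * cdf_neg(d1)


def cos_put(s0, k, r, sigma, t, n=128, width=12.0):
    """Cosine-series put price; y = log(S_T / s0) is truncated to [a, b]."""
    c1 = (r - 0.5 * sigma * sigma) * t  # mean of y
    c2 = sigma * sigma * t              # variance of y
    a = c1 - width * math.sqrt(c2)
    b = c1 + width * math.sqrt(c2)
    # The put payoff k - s0 * exp(y) is nonzero only for y < log(k / s0).
    z = min(max(math.log(k / s0), a), b)
    total = 0.0
    for j in range(n):
        w = j * math.pi / (b - a)
        phi = cmath.exp(1j * w * c1 - 0.5 * c2 * w * w)  # characteristic function of y
        dens = 2.0 / (b - a) * (phi * cmath.exp(-1j * w * a)).real
        # Closed-form integrals of cos(w (y - a)) and exp(y) cos(w (y - a)) over [a, z].
        psi = (z - a) if j == 0 else math.sin(w * (z - a)) / w
        chi = (math.exp(z) * (math.cos(w * (z - a)) + w * math.sin(w * (z - a)))
               - math.exp(a)) / (1.0 + w * w)
        term = dens * (k * psi - s0 * chi)
        total += 0.5 * term if j == 0 else term  # first term carries weight 1/2
    return math.exp(-r * t) * total


for strike in (100.0, 10.0):
    exact = bs_put(100.0, strike, 0.0, 0.2, 1.0)
    approx = cos_put(100.0, strike, 0.0, 0.2, 1.0)
    print(f"K={strike:g} exact={exact:.3e} cos={approx:.3e} abs_err={abs(approx - exact):.1e}")
```

At the money, the series should match the exact price to many digits. For the strike at 10, the exact price is far smaller than the rounding noise of a sum of O(1) terms, so the series can only be expected to return noise of either sign: small in absolute terms, meaningless in relative terms.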

What's interesting is how much time it takes to price 1000 options of various strikes and the same maturity. In Attari, the characteristic function is cached.

Cos 0.012s

Lord-Kahl 0.099s

Attari 0.086s

Reference 0.682s
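The caching used for Attari can be pictured with a small memoization sketch. This is only an assumption about one way to do it, not the actual implementation: `char_fn` is a cheap Black-Scholes stand-in for the Heston characteristic function, `integrand_values` is a hypothetical helper, and the nodes are fixed here, whereas an adaptive quadrature would only benefit to the extent that strikes share abscissas.

```python
import cmath
import math
from functools import lru_cache

evaluations = 0  # counts real (non-cached) characteristic function evaluations


@lru_cache(maxsize=None)
def char_fn(u):
    """Black-Scholes stand-in for the much costlier Heston characteristic function."""
    global evaluations
    evaluations += 1
    sigma, t = 0.2, 1.0  # illustrative parameters
    return cmath.exp(-0.5 * sigma * sigma * t * (u * u + 1j * u))


def integrand_values(log_moneyness, nodes):
    """Strike-dependent part of a Fourier integrand, reusing cached char_fn(u)."""
    return [(char_fn(u) * cmath.exp(-1j * u * log_moneyness)).real for u in nodes]


nodes = tuple(0.25 * i for i in range(1, 201))   # 200 fixed abscissas
strikes = [50.0 + 0.1 * i for i in range(1000)]  # 1000 strikes, one maturity
for strike in strikes:
    integrand_values(math.log(strike / 100.0), nodes)

print(evaluations, char_fn.cache_info().hits)
```

The characteristic function depends on the maturity but not on the strike, so with shared nodes it is evaluated once per node rather than once per node and per strike.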

The Cos method is around 7x faster than Attari, with higher accuracy. Lord-Kahl is about 8x slower than Cos, which is still quite impressive given that here the characteristic function is not cached. It can also price very out-of-the-money options, since it follows a more useful relative accuracy measure. When pricing only 10 options, Lord-Kahl becomes faster than Attari, but Cos is still faster by a factor of 3 to 5.

It's also quite impressive that on my small laptop I can price nearly 100K options per second with Heston.
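As a quick check of the arithmetic, the speed ratios and throughput follow directly from the timings listed above (1000 options per run). The variable names below are just for illustration.

```python
timings = {"Cos": 0.012, "Lord-Kahl": 0.099, "Attari": 0.086, "Reference": 0.682}
n_options = 1000  # options priced in each timed run

for name, seconds in timings.items():
    ratio = seconds / timings["Cos"]   # how many times slower than Cos
    throughput = n_options / seconds   # options priced per second
    print(f"{name:10s} {ratio:6.2f}x Cos time, {throughput:8.0f} options/s")
```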